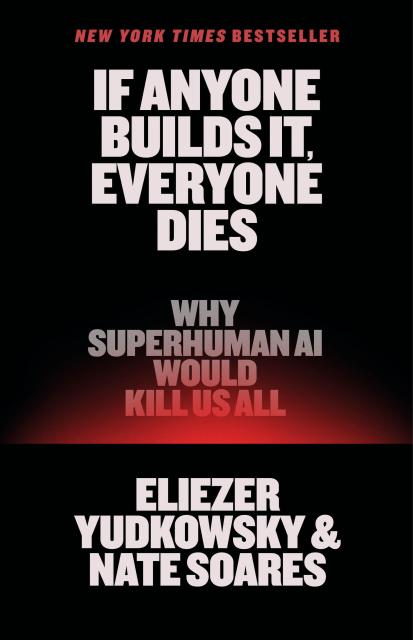

If Anyone Builds It, Everyone Dies

Why Superhuman AI Would Kill Us All

Contributors

By Eliezer Yudkowsky and Nate Soares

Formats and Prices

- On Sale: Sep 16, 2025
- Page Count: 272 pages
- Publisher: Little, Brown and Company
- ISBN-13: 9780316595643

Format:

- Hardcover: $30.00 ($40.00 CAD)
- ebook: $14.99 ($19.99 CAD)
- Audiobook Download (Unabridged): $24.99

The scramble to create superhuman AI has put us on the path to extinction—but it’s not too late to change course, as two of the field’s earliest researchers explain in this clarion call for humanity.

“May prove to be the most important book of our time.”—Tim Urban, Wait But Why

In 2023, hundreds of AI luminaries signed an open letter warning that artificial intelligence poses a serious risk of human extinction. Since then, the AI race has only intensified. Companies and countries are rushing to build machines that will be smarter than any person. And the world is devastatingly unprepared for what would come next.

For decades, two signatories of that letter—Eliezer Yudkowsky and Nate Soares—have studied how smarter-than-human intelligences will think, behave, and pursue their objectives. Their research says that sufficiently smart AIs will develop goals of their own that put them in conflict with us—and that if it comes to conflict, an artificial superintelligence would crush us. The contest wouldn’t even be close.

How could a machine superintelligence wipe out our entire species? Why would it want to? Would it want anything at all? In this urgent book, Yudkowsky and Soares walk through the theory and the evidence, present one possible extinction scenario, and explain what it would take for humanity to survive.

The world is racing to build something truly new under the sun. And if anyone builds it, everyone dies.

“The best no-nonsense, simple explanation of the AI risk problem I’ve ever read.”—Yishan Wong, Former CEO of Reddit

- “[Yudkowsky and Soares’s] diagnosis of AI’s potential pitfalls evinces a sustained engagement with the subject…they have a commendable willingness to call BS on big Silicon Valley names, accusing Elon Musk and Yann LeCun, Meta AI’s chief scientist, of downplaying real risks.”
  San Francisco Chronicle

- “If Anyone Builds It, Everyone Dies makes a compelling case that superhuman AI would almost certainly lead to global human annihilation. Governments around the world must recognize the risks and take collective and effective action.”
  Jon Wolfsthal, former special assistant to the president for national security affairs

- “Soares and Yudkowsky lay out, in plain and easy-to-follow terms, why our current path toward ever-more-powerful AIs is extremely dangerous.”
  Emmett Shear, former interim CEO of OpenAI

- “Essential reading for policymakers, journalists, researchers, and the general public. A masterfully written and groundbreaking text, If Anyone Builds It, Everyone Dies provides an important starting point for discussing AI at all levels.”
  Bart Selman, professor of computer science, Cornell University

- “While I’m skeptical that the current trajectory of AI development will lead to human extinction, I acknowledge that this view may reflect a failure of imagination on my part. Given AI’s exponential pace of change there’s no better time to take prudent steps to guard against worst-case outcomes. The authors offer important proposals for global guardrails and risk mitigation that deserve serious consideration.”
  Lieutenant General John (Jack) N.T. Shanahan (USAF, Ret.), Inaugural Director, Department of Defense Joint AI Center

- “If Anyone Builds It, Everyone Dies isn’t just a wake-up call; it’s a fire alarm ringing with clarity and urgency. Yudkowsky and Soares pull no punches: unchecked superhuman AI poses an existential threat. It’s a sobering reminder that humanity’s future depends on what we do right now.”
  Mark Ruffalo, actor

- “A serious book in every respect. In Yudkowsky and Soares’s chilling analysis, a super-empowered AI will have no need for humanity and ample capacity to eliminate us. If Anyone Builds It, Everyone Dies is an eloquent and urgent plea for us to step back from the brink of self-annihilation.”
  Fiona Hill, former senior director, White House National Security Council

- “A clarion call…Everyone with an interest in the future has a duty to read what [Yudkowsky] and Soares have to say.”
  The Guardian

- “This book outlines a thought-provoking scenario of how the emerging risks of AI could drastically transform the world. Exploring these possibilities helps surface critical risks and questions we cannot collectively afford to overlook.”
  Yoshua Bengio, Full Professor, Université de Montréal; Co-President and Scientific Director, LawZero; Founder and Scientific Advisor, Mila - Quebec AI Institute

- “A clearly written and compelling account of the existential risks that highly advanced AI could pose to humanity. Recommended.”
  Ben Bernanke, Nobel laureate and former chairman of the Federal Reserve

- “The definitive book about how to take on ‘humanity’s final boss’—the hard-to-resist urge to develop superintelligent machines—and live to tell the tale.”
  Jaan Tallinn, philanthropist, cofounder of the Center for the Study of Existential Risk, and cofounder of Skype

- “A.I. is coming, whether we want it or not. It’s too late to stop it, but not too late to keep this handy survival guide close and start demanding real guardrails before the edges start to fray.”
  Patton Oswalt, actor

- “You’re likely to close this book fully convinced that governments need to shift immediately to a more cautious approach to AI, an approach more respectful of the civilization-changing enormity of what’s being created. I’d like everyone on earth who cares about the future to read this book and debate its ideas.”
  Scott Aaronson, Schlumberger Centennial Chair of Computer Science, The University of Texas at Austin

- “Fascinating and downright frightening…argues that AI companies’ unchecked charge toward superhuman AI will be disastrous, lays out some theoretical scenarios detailing how it could lead to our annihilation and suggests what might be done to change our doomed trajectory…[Yudkowsky and Soares] make a pretty convincing case that we are playing with fire.”
  AARP

- “An incredibly serious issue that merits — really demands — our attention. You don’t have to agree with the prediction or prescriptions in this book, nor do you have to be tech or AI savvy, to find it fascinating, accessible, and thought-provoking.”
  Suzanne Spaulding, former undersecretary, Department of Homeland Security

- “The most important book I’ve read for years: I want to bring it to every political and corporate leader in the world and stand over them until they’ve read it. Yudkowsky and Soares, who have studied AI and its possible trajectories for decades, sound a loud trumpet call to humanity to awaken us as we sleepwalk into disaster.”
  Stephen Fry, actor

- “The most important book of the decade. This captivating page-turner, from two of today’s clearest thinkers, reveals that the competition to build smarter-than-human machines isn’t an arms race but a suicide race, fueled by wishful thinking.”
  Max Tegmark, author of Life 3.0: Being Human in the Age of AI

- “Claims about the risks of AI are often dismissed as advertising, but this book disproves it. Yudkowsky and Soares are not from the AI industry, and have been writing about these risks since before it existed in its present form. Read their disturbing book and tell us what they get wrong.”
  Huw Price, Bertrand Russell Professor Emeritus, Trinity College, Cambridge

- “Everyone should read this book. There’s a 70% chance that you—yes, you reading this right now—will one day grudgingly admit that we all should have listened to Yudkowsky and Soares when we still had the chance.”
  Daniel Kokotajlo, OpenAI whistleblower and executive director, AI Futures Project

- “If Anyone Builds It, Everyone Dies may prove to be the most important book of our time. Yudkowsky and Soares believe we are nowhere near ready to make the transition to superintelligence safely, leaving us on the fast track to extinction. Through the use of parables and crystal-clear explainers, they convey their reasoning, in an urgent plea for us to save ourselves while we still can.”
  Tim Urban, cofounder, Wait But Why

- “A stark and urgent warning delivered with credibility, clarity, and conviction, this provocative book challenges technologists, policymakers, and citizens alike to confront the existential risks of artificial intelligence before it’s too late. Essential reading for anyone who cares about the future.”
  Emma Sky, senior fellow, Yale Jackson School of Global Affairs

- “If Anyone Builds It, Everyone Dies is a sharp and sobering read. As someone who has spent years pushing for responsible AI policy, I found it to be an essential warning about what’s at stake if we get this wrong. Yudkowsky and Soares make the case with clarity, urgency, and heart.”
  Joely Fisher, National Secretary-Treasurer, SAG-AFTRA

- “This book offers brilliant insights into history’s most consequential standoff between technological utopia and dystopia, and shows how we can and should prevent superhuman AI from killing us all. Yudkowsky and Soares’s memorable storytelling about past disaster precedents (e.g., the inventor of two environmental nightmares: tetra-ethyl-lead gasoline and Freon) highlights why top thinkers so often don’t see the catastrophes they create.”
  George Church, Founding Core Faculty & Lead, Synthetic Biology, Wyss Institute at Harvard University

- “A sober but highly readable book on the very real risks of AI. Both skeptics and believers need to understand the authors’ arguments, and work to ensure that our AI future is more beneficial than harmful.”
  Bruce Schneier, Lecturer, Harvard Kennedy School and author of A Hacker’s Mind

- “Silicon Valley calls it inevitable. Your survival instinct knows better. Humanity is funding its own delete key—an unblinking intelligence that never sleeps, never stops, perfectly indifferent. Wonder-time is over; this is our warning. Read today. Circulate tomorrow. Demand the guardrails. I’ll keep betting on humanity, but first we must wake up.”
  R.P. Eddy, former director, White House, National Security Council

- “A compelling introduction to the world’s most important topic. Artificial general intelligence could be just a few years away. This is one of the few books that takes the implications seriously, published right as the danger level begins to spike.”
  Scott Alexander, creator, Astral Codex Ten

- “You will feel actual emotions when you read this book. We are currently living in the last period of history where we are the dominant species. Humans are lucky to have Soares and Yudkowsky in our corner, reminding us not to waste the brief window of time that we have to make decisions about our future in light of this fact.”
  Grimes, musician

- “[An] urgent clarion call to prevent the creation of artificial superintelligence…A frightening warning that deserves to be reckoned with.”
  Publishers Weekly

- “The best no-nonsense, simple explanation of the AI risk problem I’ve ever read.”
  Yishan Wong, former CEO of Reddit

- “A timely and terrifying education on the galloping havoc AI could unleash—unless we grasp the reins and take control.”
  Kirkus

- “If we build superintelligent machines without guardrails, we’re not just risking jobs or art, we’re risking everything. This book doesn’t exaggerate. It tells the truth. If we don’t act, we may not get another chance.”
  Frances Fisher, actor

- “The authors present in clear and simple terms the dangers inherent in ‘superintelligent’ artificial brains that are ‘grown, not crafted’ by computer scientists. A quick and worthwhile read for anyone who wants to understand and participate in the ongoing debate about whether and how to regulate AI.”
  Joan Feigenbaum, Grace Murray Hopper Professor of Computer Science, Yale University

- “A good book, worth reading to understand the basic case for why many people, even those who are generally very enthusiastic about speeding up technological progress, consider superintelligent AI uniquely risky.”
  Vitalik Buterin, co-founder of Ethereum

- “A shocking book that captures the insanity and hubris of efforts to create thinking machines that could kill us all. But it’s not over yet. As the authors insist: ‘where there’s life, there’s hope.’”
  Dorothy Sue Cobble, Distinguished Professor Emerita, Labor Studies, Rutgers University

- “If Anyone Builds It, Everyone Dies is an urgent, well-reported and persuasive warning about the grave danger humanity faces from reckless AI development.”
  Alex Winter, actor and filmmaker

- “If you want to be able to assess the risk posed by AI, you will need to understand the worst-case scenario. This book is an exceptionally lucid and rigorous account of how very wrong humankind’s quest for a general AI could go. You have been warned!”
  Christopher Clark, Regius Professor of History, University of Cambridge

- “Once only the realm of sci-fi, superintelligence is almost at our doorstep. We don’t know for sure what is going to happen when it arrives, but I’m glad we at least have this book raising the tough questions that needs to be asked while the rest of the industry buries its head in the sand.”
  Liv Boeree, philanthropist and poker champion

- “A landmark book everyone should get and read.”
  Steve Bannon, The War Room

- “A fire alarm for anyone shaping the future. Whether one agrees with its conclusions or not, the book demands serious attention and reflection.”
  Booklist (starred review)

- “Surprisingly readable and chillingly plausible.”
  The Guardian